Reflections on Reaching 1 Million People on Stack Overflow

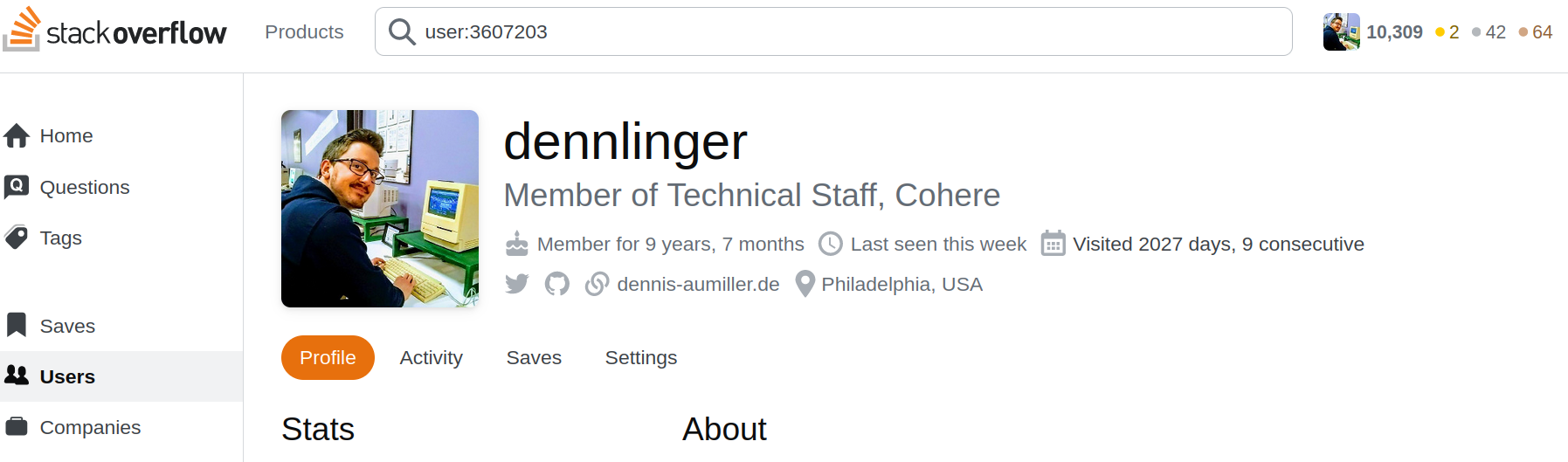

This week, I have officially reached more than 1 million people on the website stackoverflow.com! I wanted to take a moment to reflect on this “achievement”, what it means for my professional career, and why I simultaneously believe that it is sheer luck (and a LOT of procrastination) that got me here.

As always, if you have any questions or comments, feel free to message me at dennis.aumiller@gmail.com or reach out on Twitter.

Discovery of the New Cohere Summarization Endpoint

Two weeks ago, Cohere.ai announced their new dedicated summarization endpoint!

For someone currently doing their PhD on text summarization, this is both a worrying and, obviously, a rather intriguing development: while recent advancements have focused on broadly applicable models (think ChatGPT), providing more task-specific alternatives seems to be the niche that Cohere is carving out for themselves.

Adding to the surprise of seeing a dedicated summarization endpoint is the fact that text summarization is really hard; in the last 50 years, a lot of progress has been made, but our current state-of-the-art models still suffer from annoying problems, such as failing to preserve the factuality of the input text.

Another problem is the actual definition of “summaries” in different domains. Methods for generating a “good” summary of a news article are generally useless when it comes to generating the summary of a court ruling, or generating radiology reports from doctors' notes.

Due to the combination of these (and other) factors, there are comparatively few production settings in which summarization is actively used. To my knowledge, the two main applications using some form of summarization right now are news aggregators that summarize information from multiple news articles (primarily with extractive methods, meaning they directly copy existing sentences from the input documents), and the recently introduced “Document TL;DR” generator in Google Docs (which uses a variant of Google's own PEGASUS neural model).
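
To make the term "extractive" a bit more concrete, here is a minimal toy sketch in Python. It is not what any news aggregator (or Cohere, or Google) actually runs; the function name `extractive_summary`, the naive regex-based sentence splitting, and the word-frequency scoring are all assumptions chosen purely for illustration. The only point is that the output consists of sentences copied verbatim from the input.

```python
import re
from collections import Counter


def extractive_summary(text: str, num_sentences: int = 2) -> str:
    """Toy extractive summarizer: returns input sentences copied verbatim."""
    # Naive sentence split after ., ! or ? (an assumption; real systems
    # use proper sentence tokenizers).
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]

    # Count how often each lowercase word occurs in the whole document.
    freq = Counter(re.findall(r"[a-z']+", text.lower()))

    def score(sentence: str) -> float:
        # Average document frequency of the words in this sentence.
        tokens = re.findall(r"[a-z']+", sentence.lower())
        if not tokens:
            return 0.0
        return sum(freq[t] for t in tokens) / len(tokens)

    # Rank sentence indices by score (ties keep their original order) ...
    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    # ... then restore document order among the selected sentences.
    chosen = sorted(ranked[:num_sentences])
    return " ".join(sentences[i] for i in chosen)


if __name__ == "__main__":
    doc = "Cats sleep a lot. Cats also eat fish. Rain fell yesterday."
    print(extractive_summary(doc, num_sentences=2))
```

An abstractive model such as PEGASUS, in contrast, generates new text token by token, which is exactly where the factuality problems mentioned earlier tend to creep in.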